Proposal: A Human Augment Space, v0.1

Long-promised exposition on WTF this thing even is really

First off - the elephant in the room: it’s been an embarrassingly long time since the last post here, the one where I promised a plan. I started this post almost a year ago, then took a detour to explore some of these ideas in fiction. That detour went better than I expected, and so it left more of a lacuna than intended.

I’m probably not going to finish that expedition any time soon, so I’m back to keep arguing the case, this time with some concrete suggestions of where we can go from here.

-N

The first few posts on Augmented Realist have teed up the problem, but if you’re new here, here’s the TL;DR:

The world’s largest companies are racing to create vertically-integrated augmented reality systems. Those systems, because of their form factor, will eat all computing.

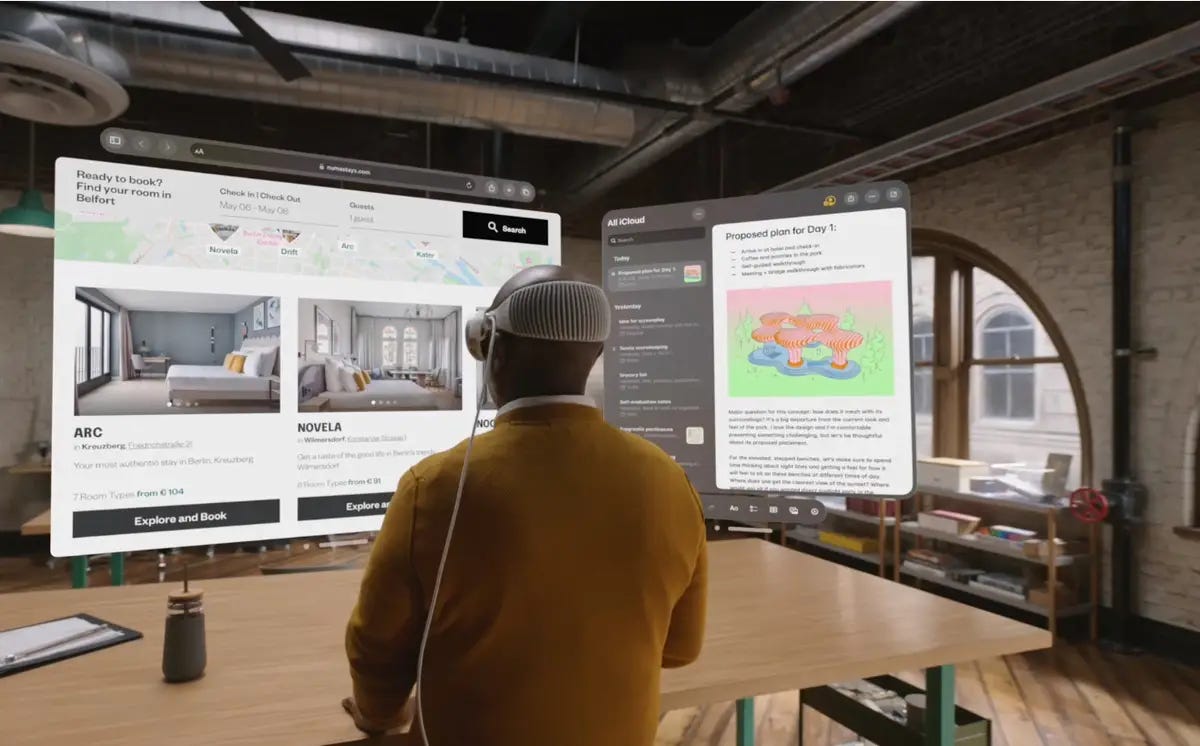

In fact, all screen-based activity, computing and otherwise, will be rolled up into AR. Nobody needs a TV or a computer or a smartphone or a watch or even signage when they already have a screen between them and everything they can see. This includes entertainment, work, browsing, and anything with an interface: all of it is served better, and more cheaply, by AR’s ability to put pixels anywhere in your line of sight than by the screens and printed material that now fill the physical world.

If, as seems likely, only a handful of these companies capture a lion’s share of the AR market, the rules of their ecosystems will determine what is possible for almost every user in almost every digital activity.

This might call to mind Apple’s total control of iOS, which seems like a tolerable kind of prison, but remember that no matter how locked down the App Store gets, an iPhone can still access the web. And while Apple’s WebKit - the only engine iOS browsers can use - is opinionated and limited in what it supports, at least you don’t need Apple’s approval to create or update a webpage, as you do with an iOS app. And, what’s more, webpages (‘web apps’) can be made to do almost anything an app can do, in a mobile-native way.

This is an important point: no matter how locked down smartphones or computers are today, they still can access the web, which is a mostly free environment for expression and commerce and executable code.

AR is another paradigm entirely - its immersive, context-aware, spatial experiences are only possible on AR hardware. Unlike today’s web, which is accessible even on lightweight devices like refrigerators, watches, and cars, tomorrow’s AR won’t work outside of the systems built specifically to enable it.

That means anything created for AR, whether applications, games, art, reference, networking - any of it - will only be accessible in AR, locking it into a chicken-and-egg relationship with the hardware we’re just now seeing on the market.

The way things are going, there will be no analogue to the wide-open web for augmented reality. The only AR-native activities users will be able to undertake, and developers will be able to create, will be those sanctioned by, and taxed by, the Metas and Googles and Apples of the world. All computing activity must be co-signed by the platform creators.

Concerns over digital rights aren’t nice-to-haves or policy-wonk wish list items anymore. We do everything online, and the often-horrific examples of what happens when you don’t have privacy and self-determination on the internet are piling up.

When your computer (AR glasses or contacts or implants) is in between you and the world, then everything you do is on your computer, and today’s problems are magnified a thousandfold. Imagine Facebook (Meta) or Google or Apple running not just the software that powers your whole computer / smartphone / smartwatch, but the software and hardware you wear in front of your eyes as you go about your entire life.

WHAT ABOUT THE WEB

When, not if, augmented reality eats all computing, it won’t make the web we have today go away. Flat ‘screens’ still work in AR, and flat-screen experiences are even more ergonomic and convenient when you can put the screen anywhere, at any size.

But despite heroic efforts by some very smart people over a very long time to make it so, the web we have today is not going to provide the backbone for a real AR-first internet.

The fundamental issue is that DNS is unsuited to connecting real, physical people, places, and things to digital payloads, and some even doubt that TCP/IP is up to the task of moving the necessary bits around to do the job in AR.

Even if the infrastructure of the web were better suited to the use cases and workloads of AR, the web is poorly suited to furthering privacy, openness, transparency, and decentralization. AR’s involvement in all of your shit, described above, makes that a dealbreaker.

We need a solution purpose-built to both reflect our values and to provide a backbone to an AR-first internet.

BACKGROUND

If you’re new here, a completionist, or if you want to catch up further before proceeding, get the full background on the stakes with:

To get an expansive view of what augmented reality might evolve into and why it matters, check out:

For a bit more about why private solutions to this anchoring problem aren’t in our best interests:

To understand the efforts currently underway by digital landlords to rent-seek in AR:

OK ... Caught up? Bought in?

I’ve teased this enough, and I’ve fired some shots from cover. Time to put the plan across and solicit feedback. I’ll go through each section of this stack quickly and then unpack and refine each bit in more detailed articles later on.

A REMINDER

The AR use case I’m targeting here is the one expounded on in What is Augmented Reality (For).

In brief: while it’s natural to first conceive of single-purpose augmented reality applications - things like HUDs, games, and tools - the much more profound thing I’m trying to unlock is the ability for anyone to attach any digital thing to any real thing, person, or place much as we already do with concepts, ideas, and language.

That ‘augment space’ or ‘A Space’ idea (placeholder title - ‘world wide web’ and ‘internet of things’ are taken … suggestions welcome) is where the real power of AR lives.

THE PROPOSITION

I propose we create a means for anyone to attach any digital thing to any real thing, and for users to access those digital things in a way that reflects our values.

We’d create a means for any device to compute a mostly-deterministic but meaningless identifier (a hash, perhaps formatted like a UUID) for any real thing.

With this hash, a device could ask the service of the user’s choice to describe that thing in language - most usefully in nouns and adjectives.

With this description, a device could then use the service of the user’s choice to search, or suggest (AI Clippy), or ask for recommendations for digital things (augments) that are attached to that real thing or to the language used to describe it.

With those augments, a filter of the user’s choice (Clippy again) could narrow down the enormous number of augments available to only those that might be helpful to that user in the current context.
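To make the filtering step a little more concrete, here’s a minimal Python sketch of what a user-chosen filter might do. It assumes, hypothetically, that each candidate augment arrives with a relevance score and some tags, and that ‘context’ boils down to the current activity plus the user’s muted tags, with a per-activity attention budget. None of these names (`Augment`, `Context`, `ATTENTION_BUDGET`, `filter_augments`) come from an existing system; a real filter would be far richer.

```python
from dataclasses import dataclass, field

# Hypothetical per-activity limits on how many augments may surface at once.
ATTENTION_BUDGET = {"driving": 0, "running": 1, "conversation": 1, "idle": 5}


@dataclass
class Augment:
    url: str
    score: float                                  # relevance estimate from search/recommendation
    tags: set[str] = field(default_factory=set)


@dataclass
class Context:
    activity: str
    muted_tags: set[str] = field(default_factory=set)  # user preferences, kept on-device


def filter_augments(candidates: list[Augment], ctx: Context) -> list[Augment]:
    """Drop muted augments, then keep only the top-scoring few the context allows."""
    budget = ATTENTION_BUDGET.get(ctx.activity, 1)   # unknown activities get a cautious default
    allowed = [a for a in candidates if not (a.tags & ctx.muted_tags)]
    allowed.sort(key=lambda a: a.score, reverse=True)
    return allowed[:budget]


candidates = [
    Augment("https://example.org/nutrition", 0.9, {"health"}),
    Augment("https://example.org/ad", 0.95, {"ad"}),
    Augment("https://example.org/recycling", 0.4, {"environment"}),
]
ctx = Context("running", muted_tags={"ad"})
print([a.url for a in filter_augments(candidates, ctx)])  # ['https://example.org/nutrition']
```

The budget-per-activity choice is just one way to express ‘show me less while I’m driving’; a learned model could replace it without changing the function’s shape.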

With all this choice (of how to label the world with language, of how to search A Space with that language, of what bubbles up, of which browser to view it with, and thus of the hardware on which to create and access content), we’d retain some of the competition and freedom we have on the web, and we’d unlock the most natural, frictionless way to communicate since the spoken word.

HOW, v0.1

Let’s look at this through an end user’s use case.

You are wearing ‘gear’ (a headset, contacts, an implant, etc.). In your field of view is a can of Coke.

In A Space, ‘on top of’ that can of Coke (anchored to it) are millions of augments: digital notes, assets, and executable code created by anyone with something to say about a can of Coke, or soda, or cans, or the Coca-Cola company, or capitalism, or tooth decay, etc.

Depending on your context and preferences, you may want to see one, some, or none of those right now.

Let’s examine what happens to make that possible.

This design is intentionally modular - the intent is to create a series of interfaces, allowing users, developers, nonprofits, governments, consortia, and corporations to supply competing solutions at every link in the chain.
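Here’s one hedged reading of ‘a series of interfaces’, sketched in Python: each step is a narrow contract that anyone could implement, and the device simply chains whichever implementations the user has picked. The protocol names and method signatures (`Hasher`, `Say`, `Head`, `Browser`, `run_pipeline`) are hypothetical, chosen to mirror the four steps below, and the toy classes exist only to show the wiring.

```python
from typing import Protocol


class Hasher(Protocol):
    def hash_objects(self, frame: bytes) -> list[str]: ...        # step 1: sensor data -> object IDs


class Say(Protocol):
    def describe(self, object_id: str) -> list[str]: ...          # step 2: ID -> words (a cascade could sit behind this)


class Head(Protocol):
    def find_augments(self, words: list[str]) -> list[str]: ...   # step 3: words -> augment URLs (filtering assumed here)


class Browser(Protocol):
    def present(self, urls: list[str]) -> None: ...               # step 4: URLs -> what you see


def run_pipeline(frame: bytes, hasher: Hasher, say: Say, head: Head, browser: Browser) -> None:
    """Chain the user's chosen implementations; any one can be swapped without touching the others."""
    for object_id in hasher.hash_objects(frame):
        browser.present(head.find_augments(say.describe(object_id)))


# Toy implementations, only to show the wiring.
class ToyHasher:
    def hash_objects(self, frame: bytes) -> list[str]:
        return ["obj-1"]


class ToySay:
    def describe(self, object_id: str) -> list[str]:
        return ["soda", "can"]


class ToyHead:
    def find_augments(self, words: list[str]) -> list[str]:
        return [f"https://example.org/search?q={w}" for w in words]


class ToyBrowser:
    def __init__(self) -> None:
        self.shown: list[str] = []

    def present(self, urls: list[str]) -> None:
        self.shown.extend(urls)


browser = ToyBrowser()
run_pipeline(b"", ToyHasher(), ToySay(), ToyHead(), browser)
print(browser.shown)
# ['https://example.org/search?q=soda', 'https://example.org/search?q=can']
```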

The design embraces subjectivity and mutability — it assumes no consensus on choice of language, use of that language, choice of technology, etc. It allows the user to compose, hierarchically, a mixture of authorities and to trust only some or none, contextually.

Also intentional is the compartmentalization of information — as much as possible, information is processed on your local device and filtered in local processes, preventing the need to report everything in your field of view to a third party or cloud service, or to leak your preferences and behaviors.
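One existing technique that fits this goal, borrowed from elsewhere rather than part of the proposal as written, is the k-anonymity range query used by Have I Been Pwned’s Pwned Passwords service: the client reveals only a short prefix of a hash, the server returns every entry sharing that prefix, and the exact match happens locally. The sketch below applies that idea to a remote say. `PREFIX_LEN`, `RemoteSay`, `describe_privately`, and the in-memory table are hypothetical, and it assumes exact hash matches (with perceptual hashes, the local step would be a nearest-neighbor comparison instead).

```python
PREFIX_LEN = 4  # hypothetical: number of hex characters revealed to the remote service


class RemoteSay:
    """Stand-in for a third-party say; it only ever sees hash prefixes."""

    def __init__(self, table: dict[str, list[str]]) -> None:
        self._table = table
        self.seen_queries: list[str] = []   # everything the service learns about you

    def range_query(self, prefix: str) -> dict[str, list[str]]:
        self.seen_queries.append(prefix)
        return {h: words for h, words in self._table.items() if h.startswith(prefix)}


def describe_privately(object_hash: str, say: RemoteSay) -> list[str]:
    """Send only a prefix; pick out the exact match on-device."""
    candidates = say.range_query(object_hash[:PREFIX_LEN])
    return candidates.get(object_hash, [])


say = RemoteSay({
    "a3f9c2e1": ["soda", "can"],
    "a3f97b00": ["bread"],
    "ffee0011": ["jetski"],
})
print(describe_privately("a3f9c2e1", say))  # ['soda', 'can']
print(say.seen_queries)                     # ['a3f9']
```

The service sees that you looked at *something* in the `a3f9` bucket, but not which of the things in it.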

So here’s how it goes, cartoonishly simplified - we’ll fill in the details and edge cases later:

1: SEGMENTATION and PERCEPTUAL HASHING

INPUT: COLOR and DEPTH SENSING

OUTPUT: A HASH, or UNIQUE ID

Your gear is locally running a confection of machine learning models. These models accept information from your gear’s sensors - color camera(s), depth sensors, perhaps others. Those models segment the scene into individual objects, plants, animals, people, etc.

For each object in the scene around you, the models together generate a unique hash, or an ID. That hash could be an encoding of the three-dimensional shape of the object via something like a NeRF. It could include color information and scale as well. It might even involve that object’s location in its context or on Earth in general.

This hash is computed locally, on-device, privately, and more or less deterministically, at least enough for hash-space nearest-neighbor calculations to say with some confidence that two hashes are or aren’t of the same thing.
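The post leaves the hashing models open. As one concrete stand-in (a swapped-in technique, not necessarily what a real system would use), here’s random-hyperplane locality-sensitive hashing, a form of SimHash: each bit records which side of a random hyperplane an object’s embedding falls on, so similar embeddings tend to share most bits, and Hamming distance gives the ‘same thing or not’ check. It assumes some upstream model has already produced a fixed-length embedding for each segmented object. `EMBED_DIM`, the shared seed, and the 16-bit threshold are hypothetical; the 128 bits are wrapped in a UUID only for convenience.

```python
import random
import uuid

EMBED_DIM = 64   # hypothetical length of the per-object embedding
N_BITS = 128     # 128 bits so the hash fits a UUID-shaped identifier
SEED = 42        # every device must derive the same hyperplanes

_rng = random.Random(SEED)
HYPERPLANES = [[_rng.gauss(0.0, 1.0) for _ in range(EMBED_DIM)] for _ in range(N_BITS)]


def simhash(embedding: list[float]) -> uuid.UUID:
    """One bit per hyperplane: 1 if the embedding lies on its non-negative side."""
    bits = 0
    for plane in HYPERPLANES:
        dot = sum(p * e for p, e in zip(plane, embedding))
        bits = (bits << 1) | (1 if dot >= 0 else 0)
    return uuid.UUID(int=bits)


def hamming(a: uuid.UUID, b: uuid.UUID) -> int:
    return bin(a.int ^ b.int).count("1")


def probably_same(a: uuid.UUID, b: uuid.UUID, max_bits: int = 16) -> bool:
    """Nearest-neighbor style check; the threshold is a tunable assumption."""
    return hamming(a, b) <= max_bits


rng = random.Random(1)
can = [rng.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)]
same_can_later = [x + rng.gauss(0.0, 0.01) for x in can]  # a slightly different sensor reading
print(hamming(simhash(can), simhash(same_can_later)))     # small, but not guaranteed to be zero
```

Because every device has to derive the same hyperplanes, the seed (or the hyperplanes themselves) would effectively be part of a published standard.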

2: CASCADING LOOKUP and MAPPING

INPUT: A HASH

OUTPUT: NATURAL LANGUAGE

With the locally-computed hash for an object, your device calls out to a series of lookup servers, each providing a service we’ll call a ‘say’, and cascades through them to build up a description of the hash in natural language.

The lookup begins with your personal say, which runs its private part locally and which may contain public or private descriptions of the people, places, and things of the world. For example, you might publicly or privately label another person ‘BFF’ or ‘distasteful’ or ‘cousin by marriage’ or ‘Ted Nugent’.

You’d then move down your cascade of trusted says. Perhaps next on your list would be your circle of friends and family, which encodes a personal and potentially private view of the world.

Next in the lookup might be a mapping maintained by a group to which you belong: e.g., an endpoint provided by your business that stores trade secrets, a mapping from a military unit, from a government agency like NOAA or NASA, or from a religious or ideological group like a church, a secret society, etc.

Finally, you might fall through to pick up a more baseline definition from a major say provider like (hypothetically) Google or Wikipedia.
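The post doesn’t pin down how answers from several says combine. Assuming they’re merged in trust order, with each label credited to the most-trusted say that supplied it, a minimal Python sketch might look like the following. Says are modeled as plain functions from a hash to labels; the hashes, labels, and `cascade_describe` are made up for illustration.

```python
from typing import Callable

# A say is modeled as any function from an object hash to labels (hypothetical).
SayFn = Callable[[str], list[str]]


def cascade_describe(object_hash: str, says: list[tuple[str, SayFn]]) -> list[tuple[str, str]]:
    """Query says in trust order; keep each label once, credited to the first (most-trusted) say that gave it."""
    seen: set[str] = set()
    description: list[tuple[str, str]] = []
    for name, say in says:
        for label in say(object_hash):
            key = label.lower()   # treat 'Climber' and 'climber' as the same label
            if key not in seen:
                seen.add(key)
                description.append((label, name))
    return description


# Hypothetical lookup tables for three says, most trusted first.
PERSONAL = {"h-alex": ["BFF"]}
FRIENDS = {"h-alex": ["Alex", "climber"]}
BASELINE = {"h-alex": ["person", "climber"], "h-coke": ["soda", "can"]}

cascade = [
    ("personal", lambda h: PERSONAL.get(h, [])),
    ("friends", lambda h: FRIENDS.get(h, [])),
    ("baseline", lambda h: BASELINE.get(h, [])),
]
print(cascade_describe("h-alex", cascade))
# [('BFF', 'personal'), ('Alex', 'friends'), ('climber', 'friends'), ('person', 'baseline')]
```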

One could even imagine a system whereby your cascade differs depending on the subject matter.

As an example, perhaps for mappings where your baseline service identifies the hash as being foremost a sort of ‘bird’, you’d then go back for more specific labeling from a Cornell Lab of Ornithology say, overriding anything you got from Google because you trust Cornell more to get it right when it comes to birds.
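Subject-specific routing like the bird example could be layered on top. In this hypothetical sketch, the baseline say answers first, and if one of its labels has a trusted specialist registered for it, that specialist’s answer replaces the baseline’s. The lookup tables and function names stand in for real services; nothing here is an actual Cornell or Google endpoint.

```python
from typing import Callable

# A say is modeled as any function from an object hash to labels (hypothetical).
SayFn = Callable[[str], list[str]]


def routed_describe(object_hash: str, baseline: SayFn, specialists: dict[str, SayFn]) -> list[str]:
    """Ask the baseline say first; if one of its labels has a trusted specialist, let it override."""
    labels = baseline(object_hash)
    for label in labels:
        specialist = specialists.get(label)
        if specialist is not None:
            specific = specialist(object_hash)
            if specific:  # fall back to the baseline if the specialist knows nothing
                return specific
    return labels


# Hypothetical tables standing in for a baseline say and a bird-specialist say.
BASELINE_TABLE = {"h-bird": ["bird", "brown", "small"], "h-coke": ["soda", "can"]}
BIRD_LAB_TABLE = {"h-bird": ["Carolina wren", "songbird"]}


def baseline_say(h: str) -> list[str]:
    return BASELINE_TABLE.get(h, [])


def bird_lab_say(h: str) -> list[str]:
    return BIRD_LAB_TABLE.get(h, [])


specialists = {"bird": bird_lab_say}
print(routed_describe("h-bird", baseline_say, specialists))  # ['Carolina wren', 'songbird']
print(routed_describe("h-coke", baseline_say, specialists))  # ['soda', 'can']
```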

Importantly, this modular system acknowledges a critical reality: language is personal, subjective, and something on which there will never be consensus, yet language is the vehicle through which we organize, search for, and create information. The labeling of the world post-Babel has been done a billion times over, differently each time, and is not something we can delegate to a team at a FAANG or a crowdsourced dataset to decide for all of us.

Further, this mapping from hashes to language enables creators of augments to attach their payloads to ideas and to broad categories of things for which they don’t necessarily have the hash at the time of publishing. You might, for example, want to attach an augment to all ‘breads’, or ‘cosmetics’, or ‘jetskis’, etc.

It should be equally possible to attach augments directly to single hashes, as we’ll discuss further.
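On the publishing side, both kinds of attachment could live in the same index. This Python sketch assumes a hypothetical `AugmentIndex` that stores augment URLs against lowercased words and against exact hashes, returning hash-specific augments first. A real index would be distributed, and with perceptual hashes the exact-match lookup would become a nearest-neighbor search.

```python
from collections import defaultdict


class AugmentIndex:
    """Hypothetical publisher-side index: augments attach to words or to exact hashes."""

    def __init__(self) -> None:
        self.by_word: dict[str, list[str]] = defaultdict(list)
        self.by_hash: dict[str, list[str]] = defaultdict(list)

    def attach_to_word(self, word: str, url: str) -> None:
        self.by_word[word.lower()].append(url)

    def attach_to_hash(self, object_hash: str, url: str) -> None:
        self.by_hash[object_hash].append(url)

    def lookup(self, object_hash: str, words: list[str]) -> list[str]:
        """Hash-specific augments first, then anything attached to the object's words (no duplicates)."""
        results = list(self.by_hash.get(object_hash, []))
        for word in words:
            for url in self.by_word.get(word.lower(), []):
                if url not in results:
                    results.append(url)
        return results


index = AugmentIndex()
index.attach_to_word("bread", "https://example.org/sourdough-tips")
index.attach_to_hash("h-loaf-42", "https://example.org/this-loaf-review")
print(index.lookup("h-loaf-42", ["Bread", "baked good"]))
# ['https://example.org/this-loaf-review', 'https://example.org/sourdough-tips']
print(index.lookup("h-other-loaf", ["bread"]))
# ['https://example.org/sourdough-tips']
```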

3: SEARCH, SUGGESTION, RECOMMENDATION, FILTERING, DISCOVERY

INPUT: NATURAL LANGUAGE

OUTPUT: ADDRESSES (URLs) of AUGMENT RESOURCES

Now, for a given object (a person, place, or thing), you’ve got a cloud of words from sources you trust. Our say check for this can of Coke returned [soda, Coke, Coca-Cola, drink, beverage, water, CO2, can, high fructose corn syrup, phosphoric acid, caffeine, caramel color, carbonated, red, white, aluminum, acidic, unhealthy, high glycemic, sweet].

Our system now takes this language to our ‘head’ - a personal AI agent capable of searching and filtering on our behalf. In the system I’m proposing, a head wouldn’t be required, but I’m positing it as almost indispensable because of the nature of augmented reality.

On the web, we formulate searches, one at a time, in written language, and then scroll through the results - mostly words and a few images - at the leisurely pace at which we’re comfortable, on a small screen in fixed proximity to our bodies.

In a mature A Space, we’re sharing space with millions of augments at all times, with each and every thing, person, idea, and place around us suffused with writings, media, and code created by every person who ever had a thing to say, show, or make about that idea, plus a shitload of graffiti and spam. (This is the ‘animism’ part of Towards a Digital Animism.)

We’re interacting with this A Space while trying to use our bodies in the real world. We might be driving a car, or running, or trying to have a meaningful conversation, or alone with our thoughts. It’s hard to prove a negative, but I believe there is no UI solution that allows us to drink from that firehose and select among the nearly infinite options while doing anything else at all, especially not using our bodies for locomotion or labor or lovemaking etc.

In the here and now, we’ve been dipping our toe in this kind of delegation for more than a decade anyway, handing off more and more of the early filtering to recommender services, be they the ‘algorithms’ in streaming services, social networks, or mapping services, the ranking algorithms that sort search results for us and bubble up salient elements into ‘answer boxes’, or, increasingly, the hallucinations of LLMs.

So I don’t think I’m too far out on a limb in anticipating that, the way things are going, we’ll each be running a (local, private, fine-tuned, context-aware) ‘head’ that acts as our research assistant, personal assistant, receptionist, editor, etc., and not just for AR, but for what reaches our ‘inboxes’ as well.

So it’s your head that takes the natural language returned from your says and runs it out to any number of services you prefer - things we’d recognize as ‘search engines’ from the web, recommender systems private to any of the groups to which you belong, other AIs, etc. - looking for augments that might be worth your attention.
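The post leaves open how a head combines what several services return. Assuming each service hands back scored URLs and the user assigns a trust weight per service, a minimal fan-out-and-merge might look like this. The service functions, weights, URLs, and `head_search` itself are invented for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Hypothetical: a search service maps words to (url, score) pairs.
SearchFn = Callable[[list[str]], list[tuple[str, float]]]


def head_search(words: list[str], services: dict[str, SearchFn], weights: dict[str, float]) -> list[str]:
    """Fan the words out to every chosen service concurrently, then rank URLs by trust-weighted score."""
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(fn, words) for name, fn in services.items()}
        results = {name: fut.result() for name, fut in futures.items()}

    totals: dict[str, float] = {}
    for name, hits in results.items():
        weight = weights.get(name, 1.0)   # services without a weight count at face value
        for url, score in hits:
            totals[url] = totals.get(url, 0.0) + weight * score
    return sorted(totals, key=totals.get, reverse=True)


def web_search(words: list[str]) -> list[tuple[str, float]]:
    return [("https://example.org/coke-history", 0.8), ("https://example.org/soda-tax", 0.5)]


def dentists_group(words: list[str]) -> list[tuple[str, float]]:
    return [("https://example.org/tooth-decay", 0.9)] if "sweet" in words else []


ranked = head_search(
    ["soda", "can", "sweet"],
    {"web": web_search, "dentists": dentists_group},
    weights={"web": 0.5, "dentists": 1.0},
)
print(ranked)
# ['https://example.org/tooth-decay', 'https://example.org/coke-history', 'https://example.org/soda-tax']
```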

4: PRESENTATION

INPUT: AUGMENTS

OUTPUT: AUGMENTS

Lastly, we have something resembling a browser: something that can present augments. Exactly what this software does, what hardware it supports, and what augments it supports are further opportunities for spirited competition in a system like this.
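As a small illustration of that competition, here’s a hypothetical sketch of a browser deciding which augments it can render, based on their media types. The `AugmentResource` type, `presentable`, and the supported set are assumptions; `text/plain`, `image/png`, and `model/gltf-binary` are registered media types, while the last example type is deliberately made up.

```python
from dataclasses import dataclass


@dataclass
class AugmentResource:
    url: str
    media_type: str   # e.g. "text/plain", "model/gltf-binary"


# Hypothetical capabilities of one browser on one piece of hardware.
SUPPORTED = {"text/plain", "image/png", "model/gltf-binary"}


def presentable(resources: list[AugmentResource], supported: set[str] = SUPPORTED) -> list[AugmentResource]:
    """Keep only augments this browser knows how to render; a competing browser may choose differently."""
    return [r for r in resources if r.media_type in supported]


found = [
    AugmentResource("https://example.org/note.txt", "text/plain"),
    AugmentResource("https://example.org/can-mascot.glb", "model/gltf-binary"),
    AugmentResource("https://example.org/hologram.xyz", "application/x-hypothetical-hologram"),
]
print([r.url for r in presentable(found)])
# ['https://example.org/note.txt', 'https://example.org/can-mascot.glb']
```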

ROAST MY IDEA

OK, those are the beats. The broad strokes.

Subscribe below to get a ping next issue, where we’ll start to dig into the specifics, edge cases, and possibilities in some of these components.

[ED NOTE: I’m going to experiment with turning on comments for this post because I want and need feedback - the critical kind - on this outline.

What am I missing? Pitfalls? Opportunities? Help me with the name too.]
